In-game chats are a staple of many gaming experiences. Players love to chat with each other. But it’s no secret that many game chats suffer from colorful language that often includes profanity and personal attacks.

Even though players may tolerate this to a degree, game studios must take action to clean this up. This becomes even more important as the gaming community diversifies along age, gender, and nationality lines.

The good news is that most game studios do try to keep their chats clean. However, the players who write the verbal attacks are quick to adapt. They can easily defeat solutions based on keyword filtering, and can even outmaneuver smarter solutions that require extensive model training or labeling.

So the question is, how can you offer real-time chat that is welcoming to all?

In this post, we’d like to show you how to build a solution that does just that. It not only provides real-time, scalable chat (PubNub), but also keeps the chats free of abuse (Tisane).

Quick Intro to PubNub

PubNub empowers developers to build low-latency, realtime applications that perform reliably and securely, at a global scale. Among other offerings, PubNub provides an extensible and powerful in-app chat SDK. The chat SDK is used in a variety of scenarios, from virtual events like the ones hosted by vFairs to in-game chats. It can also be used to create chat rooms in Unity 3D.

Quick Intro to Tisane

Tisane is a powerful NLU API with a focus on detecting abusive content. Tisane detects different types of problematic content, including but not limited to hate speech, cyberbullying, attempts to lure users off the platform, and sexual advances. Tisane classifies the actual issue and pinpoints the offending text fragment; optionally, an explanation can be supplied for sanity checks or audit purposes. A user or a moderator does not have to figure out which part of the message caused the alert, or why. Tisane is a RESTful API that uses JSON for its requests and responses.
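To illustrate what "pinpoints the offending text fragment" can mean in practice, here is a minimal sketch that masks the flagged span of a message. The response shape (an `abuse` list whose entries carry `type`, `severity`, `offset`, and `length`) and the sample values are assumptions made for illustration, and the sketch also assumes that offsets count characters of the original string. Check the Tisane documentation for the actual response format.

```python
from typing import Any, Dict

# Hand-written sample shaped like a moderation response.
# Field names and values are assumptions, not captured API output.
SAMPLE_RESPONSE: Dict[str, Any] = {
    "text": "nice shot, you worthless idiot",
    "abuse": [
        {
            "type": "personal_attack",
            "severity": "high",
            "offset": 11,   # index of the first flagged character
            "length": 19,   # number of flagged characters
        }
    ],
}


def mask_fragments(message: str, response: Dict[str, Any], mask_char: str = "*") -> str:
    """Replace every flagged, non-space character with mask_char."""
    chars = list(message)
    for item in response.get("abuse", []):
        start = item["offset"]
        end = min(start + item["length"], len(chars))
        for i in range(start, end):
            if not chars[i].isspace():
                chars[i] = mask_char
    return "".join(chars)


masked = mask_fragments(SAMPLE_RESPONSE["text"], SAMPLE_RESPONSE)
```

With the sample above, `masked` is `nice shot, *** ********* *****`: only the flagged fragment is hidden, and the rest of the message stays readable.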

Tisane is integrated with PubNub. Just like with other PubNub blocks, Tisane can be easily integrated in PubNub-powered applications. That includes the applications using the in-app chat SDK.

Using Tisane with PubNub

Now, the fun part. Let’s start coding! Follow these steps to get your Tisane-powered PubNub account set up.

- Sign up for a Tisane Labs account here.

- Log on to your account and obtain the API key, either primary or secondary. This API key goes in the Ocp-Apim-Subscription-Key header of every request.

- Now go back to the PubNub portal and import the Tisane block by following the Try it now link, or clicking here.

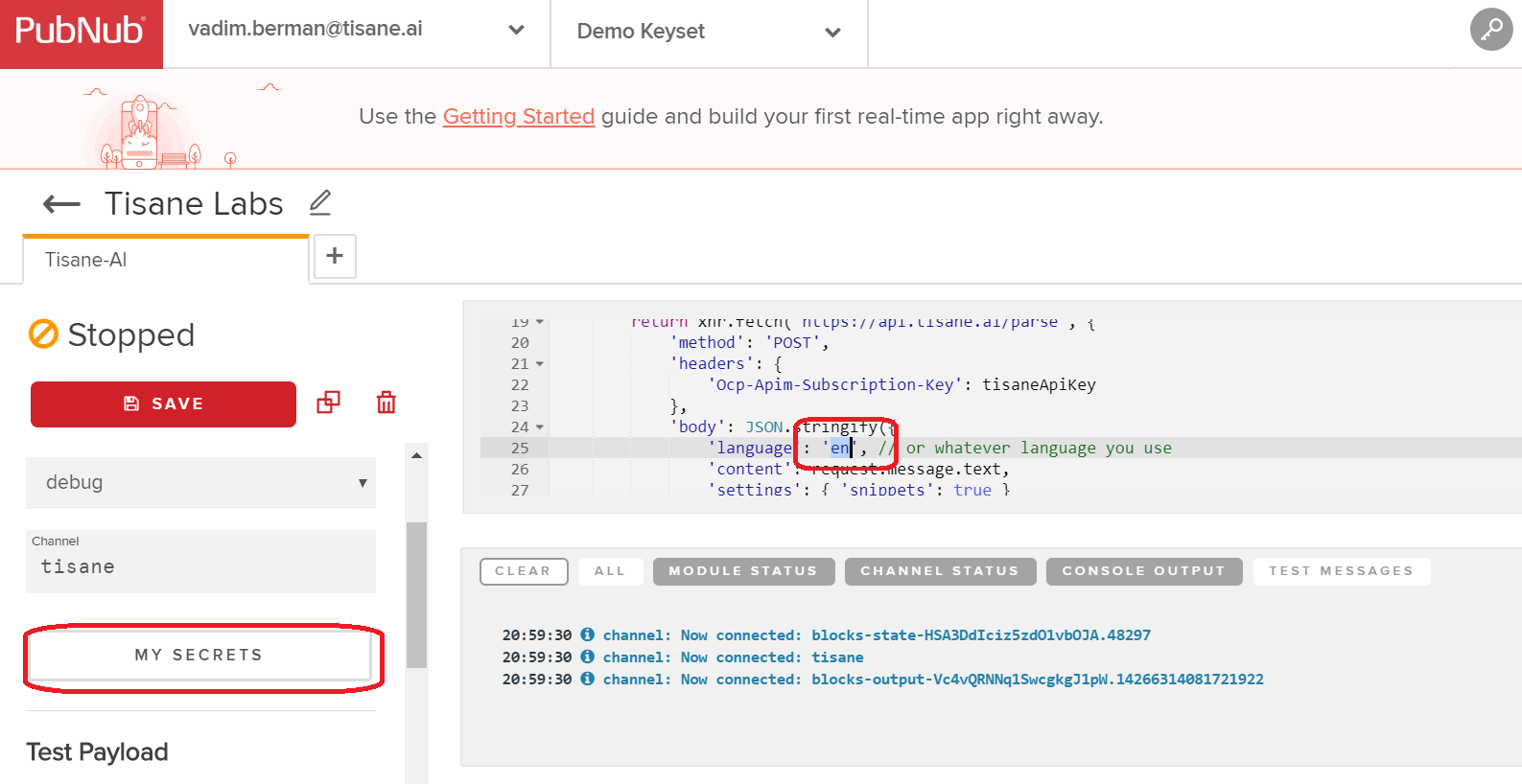

- Add your Tisane API key as tisaneApiKey to the PubNub Functions Vault using the My Secrets button in the editor.

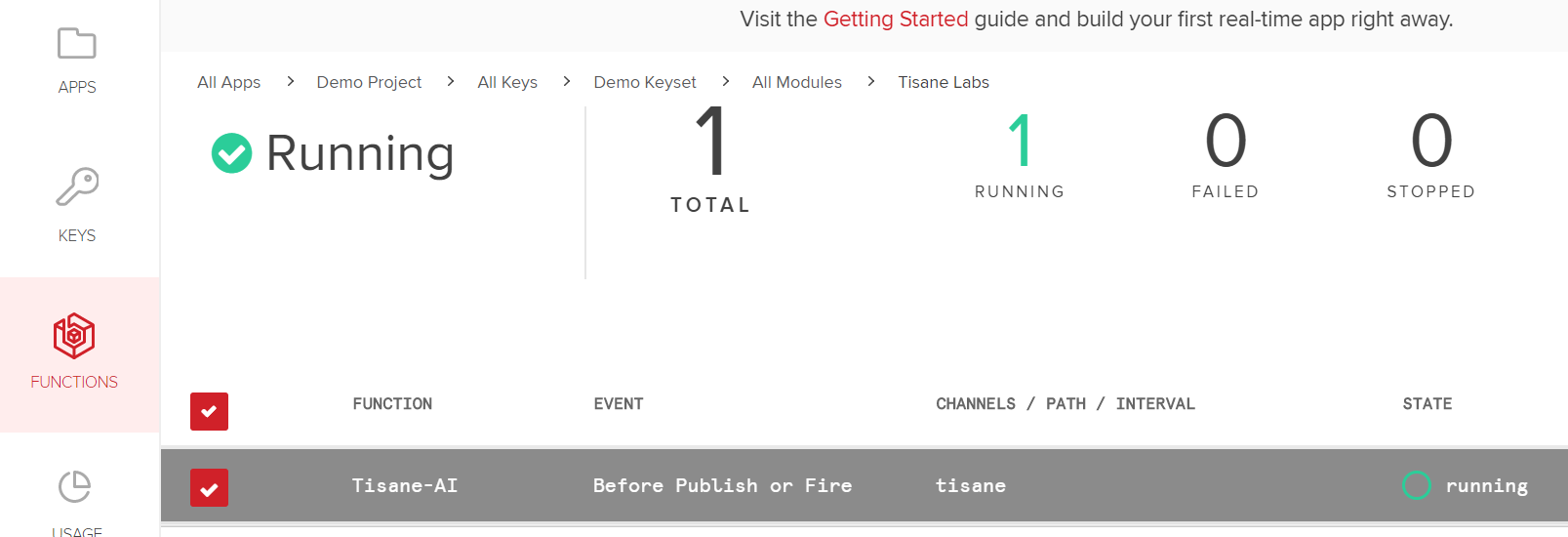

- Create a project and a module inside.

- Add a function used by the Before Publish or Fire event.

- Use this GitHub Gist for the code, modifying it if needed.

- If the input language is not English or is unknown, change en to the standard code of the input language. For unknown languages, use * (asterisk) to ask Tisane to detect the language automatically.
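The Gist runs as a PubNub Function, but the shape of the request it sends can be sketched independently of PubNub. The snippet below only assembles the request and does not send it. The `Ocp-Apim-Subscription-Key` header and the `en` / `*` language codes come from the steps above; the endpoint URL and the body field names (`language`, `content`, `settings`) are assumptions to verify against the Tisane documentation, and `build_tisane_request` is a hypothetical helper name.

```python
import json
from typing import Any, Dict

# Assumed endpoint; confirm it in the Tisane documentation.
TISANE_PARSE_URL = "https://api.tisane.ai/parse"


def build_tisane_request(api_key: str, text: str, language: str = "en") -> Dict[str, Any]:
    """Assemble (but do not send) a moderation request for one chat message.

    language is a standard language code, or "*" to request auto-detection.
    """
    headers = {
        "Ocp-Apim-Subscription-Key": api_key,  # primary or secondary key
        "Content-Type": "application/json",
    }
    # Body field names are assumptions for illustration.
    body = {"language": language, "content": text, "settings": {}}
    return {
        "method": "POST",
        "url": TISANE_PARSE_URL,
        "headers": headers,
        "body": json.dumps(body),
    }


# Unknown input language: let the service detect it.
request = build_tisane_request("YOUR_API_KEY", "gg, well played", language="*")
```

In the PubNub Function itself, the key would be read from the Vault entry `tisaneApiKey` rather than hard-coded.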

Session-level Moderation Privileges

Statistically, the vast majority of violations are personal attacks (attacks targeting another participant in the chat). When the chat is structured with responses and threads, it’s easy to figure out who the target of the attack is: usually the poster of the comment being replied to. If the chat is not structured, the target is most likely the poster of one of the recent messages.
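The heuristic in the paragraph above can be sketched as follows. This is one possible reading, not a prescribed algorithm: the `ChatMessage` type, the `suspected_targets` helper, and the default window of three recent messages are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ChatMessage:
    message_id: str
    author: str
    reply_to: Optional[str] = None  # id of the message being replied to, if any


def suspected_targets(offending: ChatMessage, history: List[ChatMessage], window: int = 3) -> List[str]:
    """Guess who a flagged message is aimed at.

    Structured chat: the author of the message being replied to.
    Unstructured chat: distinct authors of the last `window` messages,
    most recent first, excluding the offender.
    """
    if offending.reply_to is not None:
        for msg in history:
            if msg.message_id == offending.reply_to and msg.author != offending.author:
                return [msg.author]

    recent = history[-window:] if window > 0 else []
    targets: List[str] = []
    for msg in reversed(recent):
        if msg.author != offending.author and msg.author not in targets:
            targets.append(msg.author)
    return targets


history = [
    ChatMessage("1", "ann"),
    ChatMessage("2", "bob"),
    ChatMessage("3", "cat"),
    ChatMessage("4", "dan"),
]
threaded = suspected_targets(ChatMessage("5", "dan", reply_to="2"), history)
unthreaded = suspected_targets(ChatMessage("6", "dan"), history)
```

Here `threaded` is `["bob"]`, while `unthreaded` is `["cat", "bob"]`, because "ann" falls outside the three-message window and "dan" is the offender.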

Human moderators usually don’t like moderating game chats. It’s less about community building and more about banning users who are nasty to each other. And you know what? Maybe they don’t have to. Since Tisane tags personal attacks, the privilege to moderate a post containing a suspected personal attack can be delegated to the suspected target(s). If it is not a personal attack, nothing will happen. If it is indeed an insult, then the offended party will have the power to discard the message, or maybe ban the poster. Note that this only happens if Tisane suspects a personal attack.
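A minimal sketch of this delegation rule, under stated assumptions: flagged items are dictionaries with a `type` field, `"personal_attack"` is the label used for personal attacks, and the action names are hypothetical. How the grants are enforced in the chat client is outside the scope of the sketch.

```python
from typing import Any, Dict, Iterable, List, Set

PERSONAL_ATTACK = "personal_attack"  # assumed label; confirm against the Tisane docs


def moderation_grants(
    abuse_items: List[Dict[str, Any]],
    targets: Iterable[str],
    allow_ban: bool = False,
) -> Dict[str, Set[str]]:
    """Return the per-message privileges handed to each suspected target.

    Nothing is granted unless at least one flagged item is a personal attack.
    """
    if not any(item.get("type") == PERSONAL_ATTACK for item in abuse_items):
        return {}
    actions = {"discard_message"}
    if allow_ban:
        actions.add("ban_poster")
    # Give each target an independent copy of the action set.
    return {user: set(actions) for user in targets}


no_grant = moderation_grants([{"type": "profanity"}], ["bob"])
grant = moderation_grants([{"type": "personal_attack"}], ["bob"])
```

The grant is scoped to a single message: `no_grant` is empty, and `grant` lets "bob" discard only the message that was flagged.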

The community saves on moderator manpower, and potential offenders behave differently knowing that the person they attack will have the power to punish them. How often does one attack a moderator?

Of course, Tisane is not a panacea. It is merely a tool for maintaining a good experience. Like any natural language processing software, it may make mistakes. It is also intended to be a part of the process, not a substitute for it.

We recommend that gaming communities combine Tisane with low-tech, tried-and-true methods, such as considering whether the user whose post was tagged is a long-standing member of the community with a good record. When possible, drastic actions should be subject to human review as well.

We also recommend taking different actions depending on the context. Death threats or suicidal behavior should be treated differently than profanity. Scammers and potential sex predators should not be asked to rephrase their posts; they need to be quietly reported to the moderator.
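One way to encode such context-dependent handling is a small lookup table. The category labels and action names below are illustrative placeholders, not Tisane's actual labels, and would need to be mapped to whatever the API returns. The `needs_human_review` flag reflects the recommendation that drastic actions go through a human.

```python
from typing import Dict, NamedTuple


class Decision(NamedTuple):
    action: str
    needs_human_review: bool


# Hypothetical categories and actions, chosen only to mirror the text above.
POLICY: Dict[str, Decision] = {
    "death_threat": Decision("escalate_immediately", True),
    "suicidal_behavior": Decision("escalate_immediately", True),
    "scam": Decision("report_quietly", True),
    "sexual_predation": Decision("report_quietly", True),
    "personal_attack": Decision("delegate_to_target", False),
    "profanity": Decision("ask_to_rephrase", False),
}


def choose_action(category: str) -> Decision:
    """Pick a response for a flagged message.

    Categories not listed in the table are queued for a moderator
    rather than being silently allowed or blocked.
    """
    return POLICY.get(category, Decision("queue_for_moderator", True))


profanity_decision = choose_action("profanity")
scam_decision = choose_action("scam")
```

Keeping the policy in data rather than in branching code makes it easy for a community to tune the responses without touching the function that calls Tisane.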